NMF is a well-known problem in math, statistics, optimization, matrix computation, machine learning, and etc. because of being NP-hard and its importance when it comes to application.

What is NMF?

NMF as it can be implied from its name, is to express an original matrix \(W\) as the multiplication of two low rank matrices, say \(Q\times R\) such that they can approximate the matrix \(W\) with minimum error with preserving nonnegative entries.

Here is the formal definition, suppose that we want to approximate \(W \subset \mathbb{R}^{n\times m}\), with multiplication of \(Q \subset \mathbb{R}^{n\times k}\) and \(R \subset \mathbb{R}^{k\times m} \), and \(k << \min(m,n)\) according to the following minimization,

What does it do?

At first glance, NMF just approximates a matrix with two low-rank matrices (here by low-rank, we mean the number of rows or columns are much less than the original matrix), then each of these two matrices could be interpreted based on different purposes related to our application. In this post, I'm targeting clustering that's a crucial problem in machine learning.

Wait, what’s clustering?

Simply grouping the similar objects together is the ultimate goal of clustering. This is an important tool in several different fields, while is an appealing mathematical problem at the same time! Clustering is an unsupervised learning.

What’s an example of clustering?

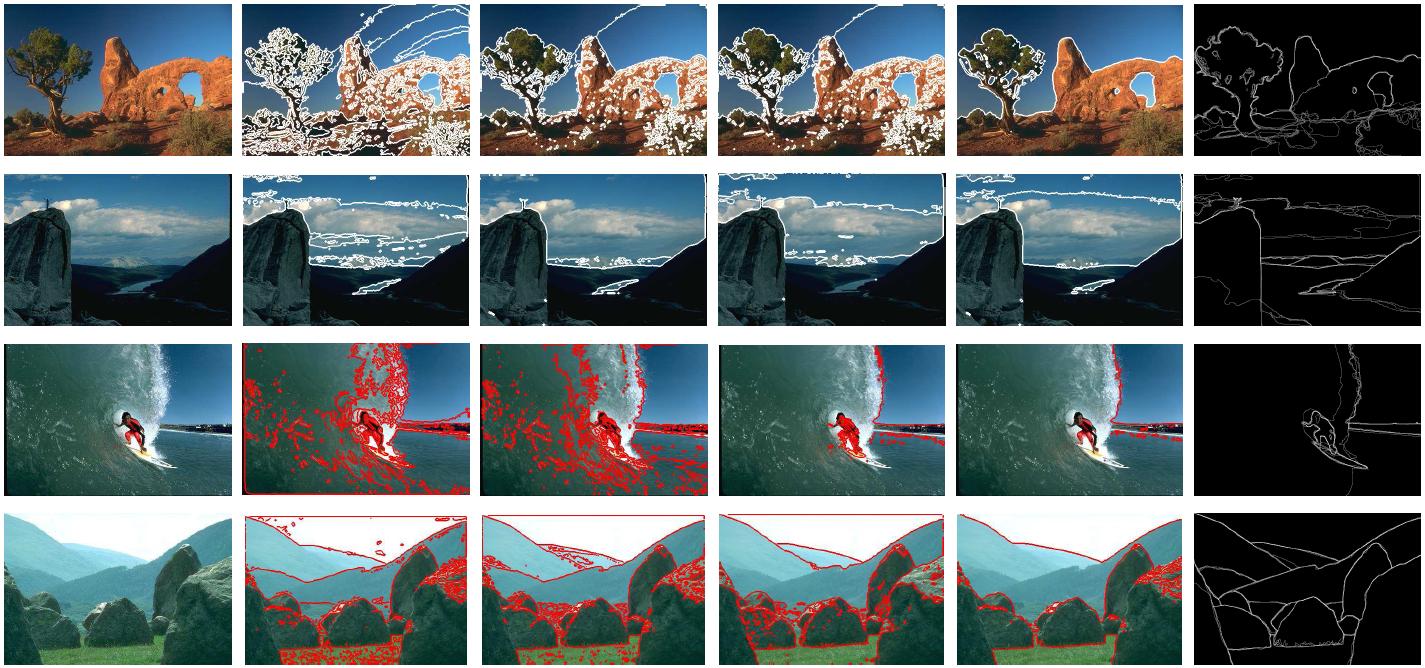

Suppose that you come across a bunch of pictures and you wanna determine how many objects exist there, so clustering can help you discovering those objects according to the similar pixels.

How NMF can find clusters?

Good question! We already introduced the NMF and talked about clustering, but where is the connection between these two notions? If you sort the columns of the original data \(W\) such that the data belonging to the same cluster, stacking over each other, then it is easy to see that each cluster has a particular pattern. In this regard, a low rank matrix can represent all those pattern, called cluster pattern or cluster centre. The first low-rank matrix \(Q\) could be considered as a cluster centre. So what's the point of the second one? If we have the cluster centres, how can we determine the membership of each data which identifies the grouping? Yes! the column-wise \( \texttt{argmax} \) of the second matrix is designed for this purpose. So that's all! Now you can use a solver to factorize your data in the NMF fashion to find out your cluster.

I suggest CVX library in the following link to apply NMF on your data.

Nonnegative matrix factorization (nonneg_matrix_fact.m)

Wait, what’s clustering?

Simply grouping the similar objects together is the ultimate goal of clustering. This is an important tool in several different fields, while is an appealing mathematical problem at the same time! Clustering is an unsupervised learning.

What’s an example of clustering?

Suppose that you come across a bunch of pictures and you wanna determine how many objects exist there, so clustering can help you discovering those objects according to the similar pixels.

How NMF can find clusters?

Good question! We already introduced the NMF and talked about clustering, but where is the connection between these two notions? If you sort the columns of the original data \(W\) such that the data belonging to the same cluster, stacking over each other, then it is easy to see that each cluster has a particular pattern. In this regard, a low rank matrix can represent all those pattern, called cluster pattern or cluster centre. The first low-rank matrix \(Q\) could be considered as a cluster centre. So what's the point of the second one? If we have the cluster centres, how can we determine the membership of each data which identifies the grouping? Yes! the column-wise \( \texttt{argmax} \) of the second matrix is designed for this purpose. So that's all! Now you can use a solver to factorize your data in the NMF fashion to find out your cluster.

I suggest CVX library in the following link to apply NMF on your data.

Nonnegative matrix factorization (nonneg_matrix_fact.m)

This comment has been removed by the author.

ReplyDeleteStill a bit vague, but thanks for sharing the idea. Here, is the R package http://cran.r-project.org/web/packages/NMF/NMF.pdf

ReplyDeleteThanks for the R package!

DeleteBasically, NMF is an NP-hard problem, suppose a diagonal matrix \(D\) with nonnegative entries, so \(QD^{-1}DR\) would produce the same factors with infinite solutions! Moreover, Frobenius norm to measure the error is just one possibility, multi purpose Bregman divergence, for instance, KL-divergence could be another alternative to compute the error. Each error measurement, results in different factors.

ReplyDeleteStodden and Dunoho in 2003, suggested separability assumption to consider few columns of the original matrix as matrix \(Q\), and Sanjeev Arora in 2012, found this idea interesting and he showed its theoretical aspects which is polynomially provable.

If you would like to read more descriptions, here is my brief presentation that I made 2 years ago and I presented it again in GERAD few weeks ago,

http://www2.ift.ulaval.ca/~quimper/Seminaires/files/Mohammad_Seminar.pdf

Additionally, I arranged my former proposal based on this topic so feel free to drop me a line if you have any questions or comments.